Background

Over the past few months, we've been working on upgrading our platform from version 10.1.1 to 10.3.1. If you are using Publishing Service, you are also required to upgrade it to version 7.x per Sitecore's compatibility article: https://support.sitecore.com/kb?id=kb_article_view&sysparm_article=KB0761308

The Problem

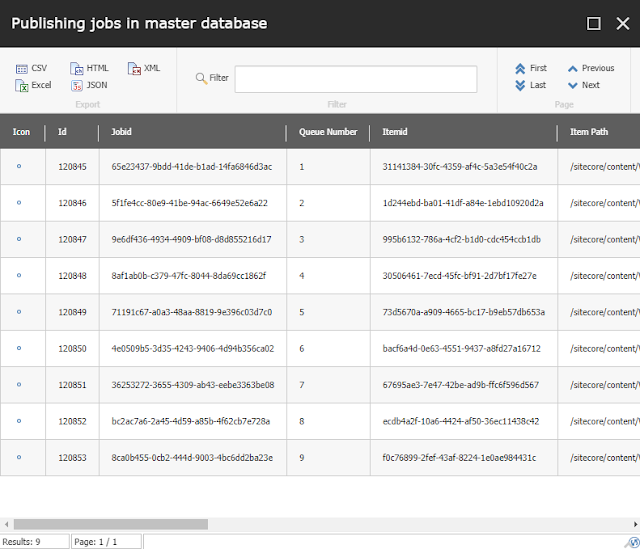

We followed the upgrade steps very carefully. However, we noticed that whenever we ran a large publish with related items (the Home item with its related items and children), the following error was thrown and the publish failed:

    [Error] Error in the "VariantsRelatedNodesTargetProducer"
    System.InvalidOperationException: The ConnectionString property has not been initialized.
       at System.Data.SqlClient.SqlConnection.PermissionDemand()
       at System.Data.SqlClient.SqlConnectionFactory.PermissionDemand(DbConnection outerConnection)
       at System.Data.ProviderBase.DbConnectionInternal.TryOpenConnectionInternal(DbConnection outerConnection, DbConnectionFactory connectionFactory, TaskCompletionSource`1 retry, DbConnectionOptions userOptions)
       at System.Data.ProviderBase.DbConnectionClosed.TryOpenConnection(DbConnection outerConnection, DbConnectionFactory connectionFactory, TaskCompletionSource`1 retry, DbConnectionOptions userOptions)
       at System.Data.SqlClient.SqlConnection.TryOpen(TaskCompletionSource`1 retry)
       at System.Data.SqlClient.SqlConnection.OpenAsync(CancellationToken cancellationToken)
    --- End of stack trace from previous location ---
       at Sitecore.Framework.TransientFaultHandling.Sql.SqlRetryHelper.<>c__DisplayClass8_0`1.<b__0>d.MoveNext()
    --- End of stack trace from previous location ---
       at Sitecore.Framework.TransientFaultHandling.Sql.SqlRetryHelper.ExecuteAsync[T](DbConnection connection, Func`1 sqlWork, Func`3 commandRetryPolicy, CancellationToken cancellationToken)
       at Sitecore.Framework.Publishing.Data.AdoNet.DatabaseConnection`1.ExecuteAsync[T](Func`2 dbWork)
       at Sitecore.Framework.Publishing.Data.Classic.ClassicItemRelationshipRepository.GetAllRelationships(String source, IReadOnlyCollection`1 uris, IReadOnlyCollection`1 outFieldIdsWhitelist, IReadOnlyCollection`1 inFieldIdsWhitelist, Predicate`1 outRelPostFilter, Predicate`1 inRelPostFilter)
       at Sitecore.Framework.Publishing.ManifestCalculation.PublishCandidateSource.GetRelatedNodes(IReadOnlyCollection`1 locators, Boolean includeRelatedContent, Boolean includeClones)
       at Sitecore.Framework.Publishing.ManifestCalculation.VariantsRelatedNodesTargetProducer.ProcessCandidatesBatch(IList`1 locators)

    System.OutOfMemoryException: Exception of type 'System.OutOfMemoryException' was thrown.
       at System.Collections.Generic.Dictionary`2.Initialize(Int32 capacity)
       at System.Collections.Generic.Dictionary`2..ctor(Int32 capacity, IEqualityComparer`1 comparer)
    --------------------------------------------
    System.OutOfMemoryException: Exception of type 'System.OutOfMemoryException' was thrown.
       at System.Collections.Generic.Dictionary`2.Resize(Int32 newSize, Boolean forceNewHashCodes)
       at System.Collections.Generic.Dictionary`2.Resize()
       at System.Collections.Generic.Dictionary`2.TryInsert(TKey key, TValue value, InsertionBehavior behavior)